Chapter 5 Joint, Marginal and Conditional Probabilities

5.1 Overview

In this chapter we’ll be looking more at joint and marginal probabilities, i.e. considering the relationship between two separate events, starting with the assumption of independence, and then working to relax that assumption.

We’ll then introduce the concept of conditional probability, where the likelihood of event B depends on whether event A is true (i.e. whether it happened). Next, we’ll introduce Bayes rule, which is an important tool for manipulating conditional probabilities. And finally, we’ll discuss probability trees, which can be a useful method to visualize conditional probabilities.

Of note, in our previous chapter we worked on examples where we knew the probabilities explicitly. That won’t always be the case here and instead we’ll often use observed proportions as probabilities.

5.1.1 Learning Objectives

By the end of this chapter you should be able to:

- Create and use a contingency table based on probabilities or counts. Calculate and fill in missing values.

- Define joint and marginal probabilities and calculate both

- Explain how joint and marginal probabilities appear in contingency tables

- Define conditional probability and give examples where it might be useful

- Use algebra to derive the relationship between joint, marginal and conditional probabilities

- Explain and use the sum of conditional probabilities rule and the general multiplication rule

- Demonstrate how to evaluate if data exhibit independence

- Define Bayes’ rule and calculate conditional probabilities using Bayes’ rule

- Draw and use tree diagrams to represent conditional probabilities

5.2 Joint and Marginal Probabilities under Independence

Previously we discussed the probability of randomly drawing a spade and a face card. This is known as the joint probability.

Taking a step back, each card has multiple attributes (or characteristics, or variables, for lack of a better word) associated with it. Two are obvious: suit and value.

And this is true for other things too. People can be Republican or Democrat and drive an electric car or not. They can be under or over 21 and can use social media more or less than 10 hrs per week. Etc., etc.

So, back to our card example, what is the probability a randomly drawn card is a diamond and 5 or below (including the Ace)? In particular it’s the AND here that indicates we’re calculating a joint probability. Similar to what we did previously, we can write this in a 2x2 table as:

| card | P(\(<=5\)) | P(\(>5\)) | Total |
|---|---|---|---|
| P(diamond) | | | 0.25 |
| P(!diamond) | | | 0.75 |
| Total | 0.384 | 0.616 | |

where this table now contains probabilities instead of counts. The values along the right edge and the bottom row are known as the marginal probabilities.

Now you go ahead and fill out the rest of this table, assuming independence between card suit and value.

To get you started, note that under the condition of independence, we know: \(P(A\ and\ B) = P(A)*P(B)\)

So, \(P(diamond\ and <=5) = P(diamond)*P(<=5) = 0.25*0.384 = 0.096\). As a check, 5 of the 52 cards meet this condition, and \(5/52 \approx 0.096\).

.

.

.

.

Here is the complete table:

| card | P(\(<=5\)) | P(\(>5\)) | Total |
|---|---|---|---|
| P(diamond) | 0.096 | 0.154 | 0.25 |
| P(!diamond) | 0.288 | 0.462 | 0.75 |
| Total | 0.384 | 0.616 | 1.00 |

For any row or column, we find the total equals the sum of its parts, such as: \(P(diamond) = P(diamond\ and\ <=5) + P(diamond\ and\ >5)\), right? Said differently, the marginal probability is the sum of the appropriate joint probabilities.
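Here is a minimal sketch of the same calculation in Python, in case seeing it as code helps. The dictionary names (`p_suit`, `p_value`, `joint`) are just labels I've made up for illustration; the numbers are the marginals from the table above.

```python
# Marginal probabilities taken from the card example above.
p_suit = {"diamond": 0.25, "not diamond": 0.75}
p_value = {"<=5": 0.384, ">5": 0.616}

# Under independence, each joint probability is the product of its two marginals.
joint = {
    (suit, value): p_s * p_v
    for suit, p_s in p_suit.items()
    for value, p_v in p_value.items()
}

for (suit, value), p in joint.items():
    print(f"P({suit} and {value}) = {p:.3f}")

# A marginal is recovered by summing the joint probabilities in its row.
row_sum = joint[("diamond", "<=5")] + joint[("diamond", ">5")]
print(f"P(diamond) = {row_sum:.2f}")
```

Note that the multiplication step relies on the independence assumption, while the row sum at the end does not.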

5.2.1 Guided Practice

Create a 2x2 table and determine both the joint and marginal probabilities if \(A\) is the event that a family at EPS owns an electric car and \(B\) is the event that they have more than one student at EPS. Let \(P(A) = 0.2\) and \(P(B) = 0.15\), and assume that these events are independent. Find the four joint probabilities and the two missing marginal probabilities.

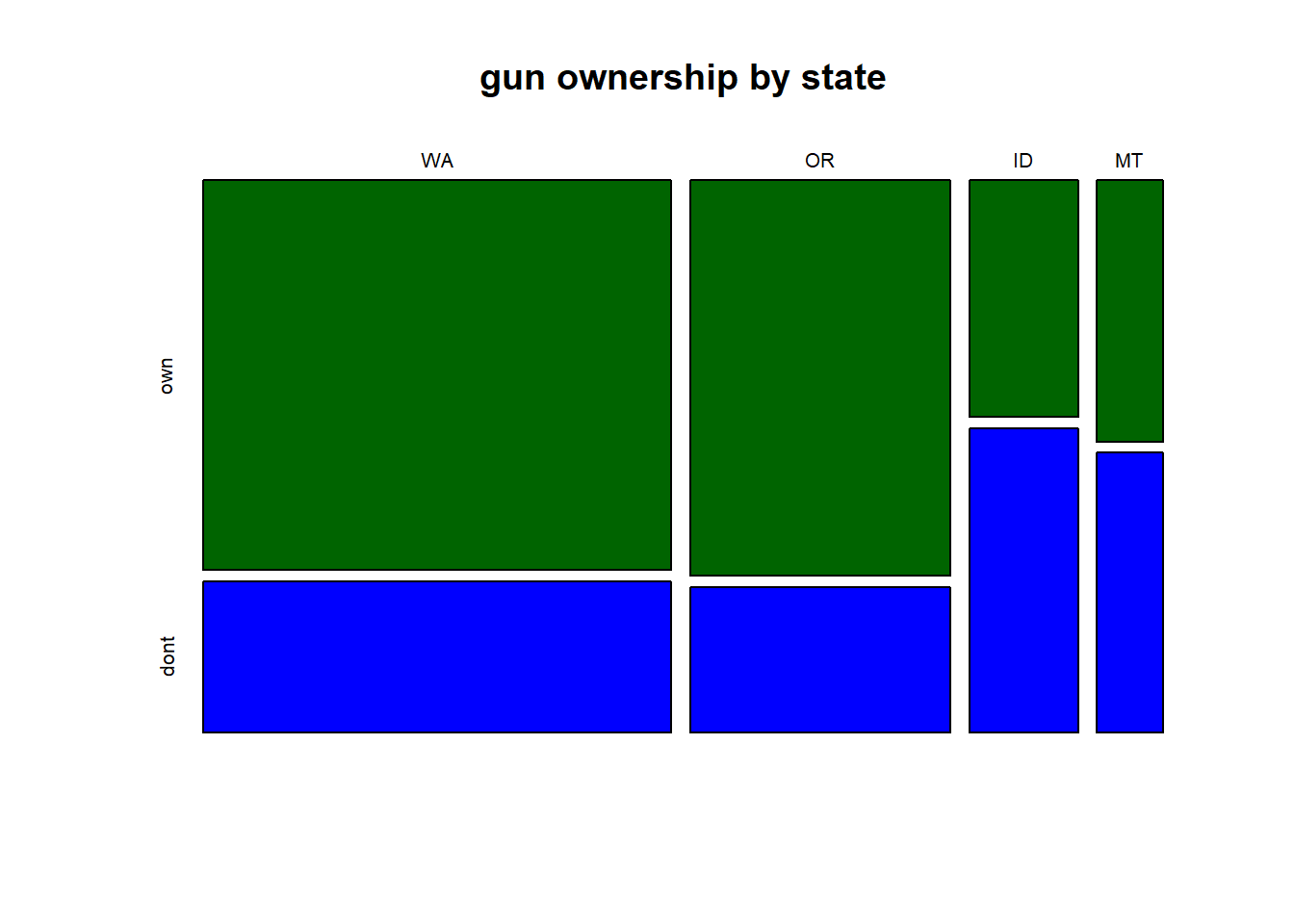

Let’s look at the relationship between state population and gun ownership in the PNW (which I’ll define to include WA, OR, ID and MT). Of the overall population, the breakdown by state is roughly: WA 52%, OR 29%, ID 12%, MT 7%. So if we randomly sampled people in the PNW, there’s a 52% chance they’d be from WA. And, for the region as a whole, 67% don’t own guns and 33% own one or more guns.

- Suppose two people are selected at random. What is the probability that both are from WA? What is the probability that neither is from WA?

- Supposing state and gun ownership are independent, what is the probability that a random person is from OR AND owns one or more guns?

- Supposing state and gun ownership are independent, what is the probability that a random person is not from WA AND doesn’t own a gun?

| P | WA | OR | ID | MT | total |
|---|---|---|---|---|---|
| don’t own a gun | | | | | 0.67 |
| own one or more guns | | | | | 0.33 |
| total | 0.52 | 0.29 | 0.12 | 0.07 | |

In other words, here I’ve given you the marginal probabilities, so can you fill out a table with the joint probabilities?

- What is the relationship between independence and joint probabilities? (Discuss)

5.2.2 Finding Joint and Marginal Probabilities

So now, let’s suppose I gave you the information the other way. If we are given a joint probability and a marginal probability, how would we find the other marginal probability?

For example, let’s look at event \(A\) as “do you play sports?” and event \(B\) as “do you live in Bellevue?” Assume these are independent.

If we know that P(play sports and live in Bellevue) = 12% and the probability that you play sports is 40%, what is the probability you live in Bellevue? At first it may seem like we don’t have enough information to solve this, but in fact, we do!

Here’s the 2x2 table to start.

| P | play | don’t play | total |
|---|---|---|---|
| Bellevue | 0.12 | | |
| ! Bellevue | | | |
| total | 0.40 | | |

Can you fill out the rest of this table? (Hint: Yes, if you rely on our assumption of independence, use our rule that \(P(A\ and\ B) = P(A)*P(B)\) and if you remember the complement rule.)

.

.

.

.

My approach is to:

- First fill in the cell of \(P(A\ and\ !B) = 0.40-0.12 = 0.28\), converting from the marginal to the missing joint probability.

- We can also calculate the missing marginal probability, \(P(!A) = 1 - 0.4 = 0.6\).

- Next, I can rearrange the multiplication rule as \(P(B) = P(A\ and\ B) /P(A)\), which gives \(P(B) = \frac{0.12}{0.4} = 0.3\).

- Finally, I can fill in the rest of the table, quickly finding that \(P(!B) = 0.7\), \(P(!A\ and\ B) = 0.6*0.3 = 0.18\) and \(P(!A\ and\ !B) = 0.6*0.7 = 0.42\)

| P | play | don’t play | total |
|---|---|---|---|
| Bellevue | 0.12 | 0.18 | 0.3 |
| ! Bellevue | 0.28 | 0.42 | 0.7 |
| total | 0.40 | 0.6 | 1.0 |

Of importance, note that all the joint probabilities sum to 1.
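If it helps, the steps above can be sketched in a few lines of Python. The variable names are my own choice for illustration, and the calculation assumes independence between playing sports and living in Bellevue, just as the example does.

```python
import math

p_joint = 0.12   # P(play sports and live in Bellevue), given
p_play = 0.40    # P(play sports), given

# Multiplication rule under independence, rearranged: P(B) = P(A and B) / P(A)
p_bellevue = p_joint / p_play

# Complement rule for the remaining marginals.
p_not_play = 1 - p_play
p_not_bellevue = 1 - p_bellevue

table = {
    ("Bellevue", "play"): p_joint,
    ("Bellevue", "don't play"): p_bellevue * p_not_play,
    ("not Bellevue", "play"): p_play - p_joint,
    ("not Bellevue", "don't play"): p_not_bellevue * p_not_play,
}
for cell, p in table.items():
    print(cell, round(p, 2))

# The four joint probabilities should sum to 1.
print(math.isclose(sum(table.values()), 1.0))
```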

5.2.3 Converting between Marginal and Joint Probabilities

We used this rule above, but didn’t discuss the algebra.

\(P(A) = P(A\ and\ B) + P(A\ and\ !B)\)

What does this say and why/when is it true? Does it depend on the assumption of independence?

Using the above example, with \(A\) as playing sports and \(B\) as living in Bellevue, the probability that I play sports is (i) the probability that I play sports AND I live in Bellevue + (ii) the probability that I play sports AND I don’t live in Bellevue.

5.2.4 Guided Practice

For the following two events A and B, fill in the missing values, assuming independence:

| P | A | !A | total |
|---|---|---|---|
| B | 0.1 | | |
| !B | | | |
| total | 0.35 | | |

5.3 Evaluating Joint Probabilities without Independence

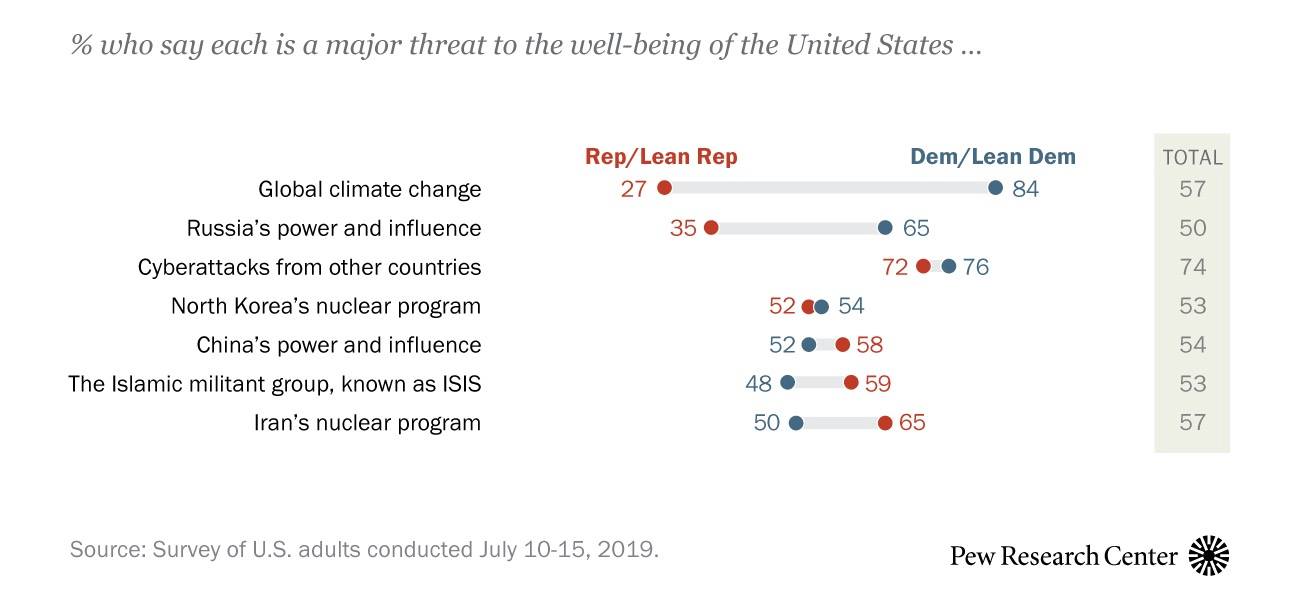

A Pew Research poll asked people about their beliefs on a range of issues and their political leanings. What do you see?

Figure 5.1: Results from a Pew Research poll asking people about their beliefs on a range of issues and their political leanings.

What does this data show? Do you think there’s independence between political affiliation and beliefs? Probably not, and in this section we’ll evaluate how to fill in 2x2 contingency tables when the assumption of independence does NOT hold.

Let’s now assume 1307 Americans were specifically asked: “Is there solid evidence that the average temperature on Earth has been getting warmer over the past few decades?” (Modified from problem 3.15 in OpenIntro Stats)

A useful way to display the results is through a contingency table:

| # | Earth is Warming | Not Warming | Total |
|---|---|---|---|
| Republican | 222 | 379 | 601 |
| Democrat | 562 | 144 | 706 |
| Total | 784 | 523 | 1307 |

What does this table show you? Can you calculate:

- The probability that a random person was a Republican?

- The probability that a random person believes the Earth is warming?

- Whether these are independent? (Probably not, right?)

In fact I’m not sure our brains are that good at looking at this and easily understanding it. So, let’s dive a little deeper, and look at the same data written as proportions.

5.3.1 Converting from Counts to Proportions

Here is the table from above as marginal and joint proportions, which we’ll manipulate similar to probabilities:

| P | Earth is Warming | Not Warming | Total |
|---|---|---|---|
| Republican | 0.17 | 0.29 | 0.46 |
| Democrat | 0.43 | 0.11 | 0.54 |
| Total | 0.60 | 0.40 | 1.00 |

First, how did I calculate these values? Note that 0.17 = 222/1307 and 0.46 = 601/1307, so in this case we’re dividing each observed count by the total number of responses. That’s the only way to make sure the joint probabilities sum to 1.
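As a rough sketch, here is the same conversion from counts to proportions in Python. The counts come from the contingency table above; the names `counts`, `joint`, `p_party` and `p_belief` are just labels I've picked for this illustration.

```python
counts = {
    ("Republican", "warming"): 222,
    ("Republican", "not warming"): 379,
    ("Democrat", "warming"): 562,
    ("Democrat", "not warming"): 144,
}
total = sum(counts.values())  # 1307 responses in all

# Joint proportions: each cell count divided by the grand total.
joint = {cell: n / total for cell, n in counts.items()}

# Marginal proportions: add up the joint proportions across a row or column.
p_party = {}
p_belief = {}
for (party, belief), p in joint.items():
    p_party[party] = p_party.get(party, 0) + p
    p_belief[belief] = p_belief.get(belief, 0) + p

for cell, p in joint.items():
    print(cell, round(p, 2))
print({k: round(v, 2) for k, v in p_party.items()})
print({k: round(v, 2) for k, v in p_belief.items()})
```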

And so, here we’ll use observed proportions to represent probabilities under the assumption that the results are representative of our population.

Q: So, what does the value 0.11 represent?

.

.

.

.

It’s the joint probability of someone being in the “Democrat AND Not Warming” group, and note that these probabilities refer to the whole group (the four interior cells of the table). As we saw above, the whole probability distribution must add to 1 and here we see \(0.17+0.29+0.43+0.11 = 1\).

Now, what if we wanted to think about events A or B in isolation? The marginal probability of P(Earth is warming) = 0.60. This represents the overall probability of believing the Earth is warming for the whole group. In our table this is found by adding the two subgroups that believe the Earth is warming (i.e. the two joint probabilities in the first column). As a reminder, marginal probabilities occur both in columns and along rows.

Q: Does this mean that 43% of Democrats believe the Earth is warming?

No, it means that 43% of our whole sample falls in the bucket of both being Democrats AND believing the Earth is warming. Is this clear?

So to review, marginal probabilities are __________________ and joint probabilities are __________________. Choose one:

- on the outside edge (typically right or bottom)

- in the center of the table

5.3.2 Notation for Joint and Marginal Probabilities

For the above table, to make our lives a little easier, let’s define:

- Let \(A\) be the event that a person is Republican, and so \(!A\) is the event that the person is Democrat.

- Let \(B\) be the event that the person believes the Earth is warming, and hence \(!B\) is the event that the person does not believe the Earth is warming.

Q: If I write \(P(A) = P(A\ and\ B) + P(A\ and\ !B)\) how would you put that into words in this specific example?

Again, this is how we convert from joint to marginal probabilities.

- What is \(P(B)\) in this same format (i.e. as a function of joint probabilities)?

- What is \(P(!A)\) in this same format (i.e. as a function of joint probabilities)?

So in general, (even without the assumption of independence) we can convert between joint and marginal probabilities using this equation. In words we can say that the marginal probability is the sum of the associated joint probabilities. And again, this is true regardless of whether events A and B show independence.

Lastly, in the case where we have independence, we can also use our Multiplication Rule for Independence, namely \(P(A\ and\ B) = P(A)*P(B)\) to convert between marginal and joint probabilities.

5.3.3 Do Observed Probabilities Exhibit Independence?

Now let’s answer the question about whether the above data exhibit independence. If party affiliation and beliefs on global warming were independent, what proportions would we expect?

| P | Earth is Warming | Not Warming | Total |
|---|---|---|---|
| Republican | 0.17 | 0.29 | 0.46 |
| Democrat | 0.43 | 0.11 | 0.54 |
| Total | 0.60 | 0.40 | 1.00 |

To evaluate this we can look at any of the cells and compare the observed proportions with the predicted proportions if independence were true.

For example, if we look at the cell which shows the proportion who are Republican and don’t believe in warming, we see a value of 0.29. If being a Republican were independent of beliefs about warming, then we would expect a proportion of \(0.46*0.40 = 0.184\) of the responses to fall in this cell.

This difference suggests that party affiliation and beliefs on global warming are NOT independent.
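To see the comparison for all four cells at once, here is a small Python sketch. It uses the rounded proportions from the table above, and the dictionary names are my own. Note this only shows the size of each gap; deciding whether a gap is large enough to matter takes a formal test, which this sketch does not attempt.

```python
observed = {
    ("Republican", "warming"): 0.17,
    ("Republican", "not warming"): 0.29,
    ("Democrat", "warming"): 0.43,
    ("Democrat", "not warming"): 0.11,
}
p_party = {"Republican": 0.46, "Democrat": 0.54}
p_belief = {"warming": 0.60, "not warming": 0.40}

# Expected joint proportion if party and belief were independent:
# the product of the two marginals for that cell.
expected = {
    (party, belief): p_party[party] * p_belief[belief]
    for (party, belief) in observed
}

for cell, obs in observed.items():
    exp = expected[cell]
    print(f"{cell}: observed={obs:.3f} expected={exp:.3f} diff={obs - exp:+.3f}")
```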

5.3.4 \(\chi^2\) GOF Testing

So I assume you’re all wondering: OK, how would we really know whether there is independence or not? There must be a better way, right? That’s a little ahead of where we are, but we’ll get there, probably in the second trimester. To summarize, we’ll look at how far away our data are from the result expected under independence, and then evaluate how likely a gap that large is based on randomness alone. If the chance of seeing data that extreme, assuming the null hypothesis of independence (\(H_0\)) is true, is less than 5%, we’ll reject our hypothesis of independence. I can say more, but we can also wait…

5.3.5 Guided Practice

- This following data comes from Stanford Open Policing Project (https://openpolicing.stanford.edu/) which we’ll do more with later in the year. Let’s imagine we have two events concerning people who are pulled over by the police. We’ll let event A be whether the person is White or not and event B be whether the person is subsequently searched.

| p | A | !A | total |

|---|---|---|---|

| B | 0.20 | ||

| !B | 0.35 | ||

| total | 0.55 |

- Use what you know about the relationship between marginal and joint probabilities to fill in the rest of the table, and make sure not to assume independence at this stage.

- Does the assumption of independence between race and subsequent search seem appropriate here?

- Let’s suppose we’re looking at social media use by age and take a random sample of 1000 people with cell phones in WA state. We categorize people between 13-25 and 26 and above (old people) and also ask how many hours per week they spend on their phones on social media (less than 10 or more than 10). Before looking at the data, would you expect age and hours on social media to be independent? Why or why not?

- The data for this problem is shown below:

| age | P(\(<=10\) hrs) | P(\(>10\) hrs) | Total |
|---|---|---|---|
| P(13-25) | 0.10 | 0.45 | 0.55 |
| P(26+) | 0.20 | 0.25 | 0.45 |
| Total | 0.30 | 0.70 | 1.00 |

Does this table show independence? Why or why not?

Given \(P(A) = P(A\ and\ B) + P(A\ and\ !B)\), fill in the following blanks:

P(26+) = P(26+ & \(<=10\) hrs) + ______________

P(\(<=10\) hrs) = __________ + ____________

And note, if you had any two (2) of these, you could find the third, right?

- Prove to yourself that the calculation of expected values in each cell using multiplication of proportions and of counts is the same. Explain why.

5.4 Conditional Probability

In this section, we’re going to introduce the idea of conditional probability, which describes a situation where we are calculating probabilities for an event, under the assumption that another event has already happened.

For example, continuing with our example from before, if someone is a Republican, what is the probability they believe the Earth is warming? (Alternatively we might say ‘given’ they’re a Republican…) Here are our two tables from before:

With counts:

| # | Earth is Warming | Not Warming | Total |
|---|---|---|---|
| Republican | 222 | 379 | 601 |
| Democrat | 562 | 144 | 706 |
| Total | 784 | 523 | 1307 |

With proportions:

| P | Earth is Warming | Not Warming | Total |
|---|---|---|---|
| Republican | 0.17 | 0.29 | 0.46 |
| Democrat | 0.43 | 0.11 | 0.54 |
| Total | 0.60 | 0.40 | 1.00 |

Note, I’m asking the question a different way here, right? Here, we know something to be true, namely that the person is a Republican. That is the ‘given’. What I’m now asking is “what is the probability that someone drawn only from the subgroup of Republicans believes the earth is warming?”

To answer this, let’s just look at the first row of the upper table. We know that the person is a Republican, so for this question, we can ignore the second row. Now, what is the chance they believe the Earth is warming?

For our notation, we write this using the vertical bar “|”, specifically as \(P(B|A)\), which we read as “the probability of B given A”.

Well, in the first table we see there are 601 total Republicans and 222 of those believe the Earth is warming, so the \(P(B|A) = 222/601 = 0.37\).

Using our probability notation, we’ve calculated this as \(P(B|A) = \frac{P(A\ and\ B)}{P(A)}\), namely the joint probability divided by the “given” marginal probability.

We could have also found this in the second table, using the proportions, where we equivalently find \(0.17/0.46 = 0.37\).

And note that the 0.37 doesn’t appear in our table!

This is known as conditional probability and again we write this as: \[P(B|A) = \frac{P(A\ and\ B)}{P(A)}\]

And with some simple math, I can also rearrange this equation as:

\[P(A\ and\ B) = P(B|A)* P(A)\] which is somehow easier for me to remember. Note to get here, I just multiplied both sides by \(P(A)\). Also note that I can interchange events \(A\) and \(B\) as needed.

Q: So, if someone believes the Earth is warming, what is the probability they are a Democrat? How would you write this in probability notation? What is the equation? What is the result?

A: In this case we’re only looking at the first column (Earth is warming), and out of those 784 people, 562 are Democrats, so 71.7%. In probability notation, we’d write this as: \(P(!A|B) = P(!A\ and\ B)/P(B) = \frac{0.43}{0.60} \approx 0.717\).
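Both of these conditional probabilities can be checked with a couple of lines of Python. This is just a sketch; the helper name `conditional` is something I've made up here, and it works directly from the counts in the first table.

```python
def conditional(both_count, given_count):
    """P(event | given) = (# with both the event and the given) / (# with the given)."""
    return both_count / given_count

# P(B|A): believes the Earth is warming, given Republican (first row of the table).
p_warming_given_rep = conditional(222, 601)

# P(!A|B): Democrat, given believes the Earth is warming (first column of the table).
p_dem_given_warming = conditional(562, 784)

print(round(p_warming_given_rep, 2))
print(round(p_dem_given_warming, 3))
```

Notice that the only thing that changes between the two questions is which total goes in the denominator: the row total when the party is the given, and the column total when the belief is the given.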

5.4.1 Guided Practice

Let’s suppose we take a representative sample of the population and find that the probability of people having at least basic health coverage is 85%, and we find that the probability of an individual having a medical emergency within 12 months is 10%. (We’ll define an emergency as something for which they need to go to the doctor.)

Also let’s suppose that the probability of a random person both not having basic health coverage and having a medical emergency is 1%.

- (a) Define your events, A and B.

- (b) What are the marginal probabilities of having/not having basic health coverage, and of having/not having a medical emergency? Write these using probability notation.

- (c) What is the joint probability of having basic health coverage and having an emergency? Write this using probability notation and give the answer.

- (d) Create a 2x2 table and calculate/fill in the missing joint probabilities.

- (e) What is the (conditional) probability of having an emergency given one doesn’t have basic health coverage? Write this using probability notation and give the answer.

- (f) What is the (conditional) probability of not having basic health coverage given one has an emergency? Write this using probability notation and give the answer.

- (g) Are these two events (health coverage and medical emergency) independent? How do you know? (Think both qualitatively and quantitatively)

- (h) What would the joint probabilities be if the events were independent?

- (i) What does the difference between (h) and (d) tell you? What’s the story here?

5.4.2 Conditional Probability vs. Independence

Above we defined conditional probability as \(P(A\ and\ B) = P(B|A)* P(A)\) which we said was always true.

But, do you remember our multiplication rule for independent events? It was \(P(A\ and\ B) = P(B) * P(A)\).

So, given these are both true under different assumptions, what does this tell us about the relationship between these equations?

First look at the equations. The only difference is the first term, \(P(B|A)\) vs \(P(B)\). In words, what is the difference between these two?

If A and B are independent, what is \(P(B|A)\)? It has to be that \(P(B|A) = P(B)\), right? So given A, (i.e. knowing if A happens or not), we don’t have any more information about whether B happens, so it doesn’t change the probability of B. So this makes sense (I think!?)
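Here is a quick numerical sketch of that idea in Python, comparing \(P(B|A)\) with \(P(B)\) for two tables from earlier in the chapter. The function name `looks_independent` and the tolerance of 0.01 are my own arbitrary choices for illustration, not a formal test.

```python
def looks_independent(p_a_and_b, p_a, p_b, tol=0.01):
    """Return True if P(B|A) is within `tol` of P(B). The tolerance is arbitrary."""
    p_b_given_a = p_a_and_b / p_a
    return abs(p_b_given_a - p_b) <= tol

# Sports/Bellevue table (built assuming independence):
# P(B|A) = 0.12 / 0.40 = 0.30, which matches P(B) = 0.30.
print(looks_independent(0.12, 0.40, 0.30))   # True

# Pew table, A = Republican, B = Earth is warming:
# P(B|A) = 0.17 / 0.46 is about 0.37, well below P(B) = 0.60.
print(looks_independent(0.17, 0.46, 0.60))   # False
```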

5.4.3 Sum of Conditional Probabilities

One last important point to cover here is the idea of the sum of conditional probabilities. We can write:

\[P(A|B) + P(!A|B) = 1\]

What this says is that conditional on B (namely once we know that B has already happened), one of those two outcomes (A or !A) then has to happen, and so those two probabilities have to sum to 1.

And why is this true? Can we derive it? Go back to our 2x2 table.

Start with converting both terms back to their joint and marginal probabilities: \(\frac{P(A\ and\ B)}{P(B)} + \frac{P(!A\ and\ B)}{P(B)}\)

Then, combine these into a single fraction over the same denominator: \(\frac{P(A\ and\ B)+P(!A\ and\ B)}{P(B)}\)

Next, look carefully at the numerator. Since \(A\) and \(!A\) are the only two possibilities, \(P(A\ and\ B)+P(!A\ and\ B)\) is simply \(P(B)\)

Hence, we are left with \(\frac{P(B)}{P(B)} = 1\) as expected.

5.4.4 Review of Conditional Probabilities

The general idea behind conditional probabilities is that for two events that are NOT independent, knowing whether one happened may impact the probability that the second does (or doesn’t) happen.

We write this as P(A|B) and say “the probability of A given B”.

We’ve learned a couple of important definitions for conditional probabilities:

\(P(B|A) = \frac{P(A\ and\ B)}{P(A)}\)

\(P(A|B) + P(!A|B) = 1\)

And of course \(A\) and \(B\) are interchangeable here.

5.4.5 Optional: The Relationship between Conditional, Joint and Marginal Probabilities

We previously defined the conditional probability as \(P(B|A) = \frac{P(A\ and\ B)}{P(A)}\).

But remember how we can convert between joint and marginal probabilities? In particular, we might write \(P(A) = P(A\ and\ B)+P(A\ and\ !B)\). If we substitute this in the denominator above, we’d have

\[P(B|A) = \frac{P(A\ and\ B)}{P(A)} = \frac{P(A\ and\ B)}{P(A\ and\ B)+P(A\ and\ !B)} \]

(Note, this is also the (# of cases where A & B) / [(# of cases where A & B) + (# of cases where A & !B)] where the denominator is all the cases where A is true, i.e. all Republicans - regardless of whether they believe the Earth is warming or not.)

One of the points here is for you to get familiar and comfortable with manipulating probabilities. It’s “just” algebra.

5.5 Bayes Rule

What we’re going to cover next is going to have some possibly surprising results. We’re going to exploit conditional probabilities in a useful way.

Who was Thomas Bayes (1701-1761)?

For those interested in Biostatistics (i.e. application of statistics to medicine, etc.), this may be one of the most important things you learn this year. And we’re just going to briefly cover it, but it could definitely be part of a term project. And it has more applications than just that.

And, there’s a whole branch of statistics known as Bayesian Statistics that is built on this theory.

5.5.1 The motivation behind Bayes Rule

Suppose we’re thinking about COVID-19, and in particular testing for whether a person has the disease. Using conditional probabilities, we could have

- P(patient has a disease | his/her test is positive), or

- P(test is positive | patient has a disease)

Can someone explain the difference between these two? (Discuss) Which is easier to figure out? Which do we really want to know? What are the associated joint and marginal probabilities?

.

.

.

.

.

As an aside, it’s probably worth discussing the concepts of false negatives and false positives. A test result can be wrong in two ways, meaning there’s some chance that:

- the patient has a disease when the test is negative (false negative)

- the patient doesn’t have the disease when the test is positive (false positive)

And hence, there can be uncertainty in the test results that we might like to characterize.

Anyway, the genius of Bayes’ rule is that it allows us to translate between the above two conditional probabilities, where one is easier to figure out than the other, and sometimes that’s the one we really care about.

5.5.2 Bayes Rule Equation

The algebra here is fairly straightforward. From before we had defined our conditional probability as:

\[P(B|A) = \frac{P(A\ and\ B)}{P(A)}\]

But there’s nothing really special about the order of A and B as I’ve said. And with some simple math I can also write: (where I’ve switched the order of the events)

\[P(A\ and\ B) = P(A|B)* P(B)\]

And so now, I can combine these, by replacing the numerator of the first with the second to give:

\[P(B|A) = \frac{P(A|B)* P(B)}{P(A)}\]

This result is known as Bayes’ Theorem or Bayes’ rule, which, as you can see, allows us to easily translate between \(P(A|B)\) and \(P(B|A)\), which is what we wanted to do above. Let’s do an example…

5.5.3 Test Sensitivity

Let’s suppose there is a certain disease, and the probability that an adult male in the general population has it is \(P(D) = 0.04\). There is a test \(T\) which can indicate if someone has the disease, but it’s not perfect. Overall, let’s assume \(P(T) = 0.038\), which is the probability of testing positive in the general population, counting both people who do and don’t have the disease. Finally, let’s assume \(P(T|D) = 0.9\), which is the probability of testing positive given one has the disease.

We want to know \(P(D|T)\), namely the probability someone has the disease, given the test came back positive.

From before, using Bayes Rule we can write (changing the variables): \(P(D|T) = \frac{P(T|D)P(D)}{P(T)}\) which then allows us to calculate the probability someone has the disease based on \(P(T|D)\), the probability the test comes back positive if they have the disease, \(P(D)\) the overall level of the disease in the population, and \(P(T)\), the overall probability of testing positive (regardless of whether you have the disease).

Plugging our values in we find:

\[P(D|T) = \frac{P(T|D)P(D)}{P(T)} = \frac{0.9*0.04}{0.038} = 0.95\]

which means the probability of having the disease, given the test is positive, is 95%. This is a good number, but it also means that not everyone who tests positive for the disease actually has it.

Again, the brilliance of this is that it allows us to use \(P(T|D)\), which is easily measurable in lab tests, to determine \(P(D|T)\), which is what we really want.
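The calculation above is short enough to sketch as a Python function. The name `bayes` and its argument names are my own; the inputs are the three values assumed in this example.

```python
def bayes(p_b_given_a, p_a, p_b):
    """Bayes' rule: P(A|B) = P(B|A) * P(A) / P(B)."""
    return p_b_given_a * p_a / p_b

p_t_given_d = 0.9   # P(T|D): positive test given disease
p_d = 0.04          # P(D): overall level of disease in the population
p_t = 0.038         # P(T): overall probability of testing positive

p_d_given_t = bayes(p_t_given_d, p_d, p_t)
print(round(p_d_given_t, 2))
```

Writing it as a function makes it easy to try other inputs and see how sensitive \(P(D|T)\) is to each one.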

5.5.4 Guided Practice

What if you were able to increase the sensitivity of the above test so that the probability of testing positive given a patient had the disease, P(T|D), was 0.93, assuming P(T) remained unchanged? How would this change P(D|T)?

The following data are about smallpox inoculation and deaths in Boston from 1721. 3.9% of the people were inoculated. Out of the whole population exposed to the disease, 13.7% died. 2.46% of the people who were inoculated died. Given someone died, what is the probability they were inoculated?

5.5.5 How would we get the data?

The above example only works if we can get reasonable estimates of each of the variables. We need:

- \(P(T|D)\), the probability the test comes back positive if they have the disease,

- \(P(D)\) the overall level of the disease in the population, and

- \(P(T)\), the overall probability of testing positive (regardless of whether you have the disease).

The first of these is doable. We can take 1000 people we know have the disease and test them. The second of these relies on statistical sampling, which we’ll discuss further later in the year, but suffice it to say we can at least estimate this. The third is also available, particularly when we break the denominator apart, which we will do shortly.

5.5.6 A More Complicated Version

In fact, we often don’t know \(P(T)\). We instead have data about how good a test is at detecting a disease, such as \(P(T|D)\) and \(P(T|!D)\). We can estimate both of these values by doing experiments on people known to have the disease or not.

Being the good statistics students we are, we know we can write \(P(T) = P(T\ and\ D) + P(T\ and\ !D)\). Why is this true? Think back to our 2x2 table and note that we’re just converting joint probabilities into marginal probabilities.

Extending this, we can use the relationship between joint and conditional probabilities to write \(P(T)= P(T|D)*P(D) + P(T|!D)*P(!D)\)

In words, what we’re doing is looking at the ways that the test could come back positive, \(P(T)\). It could either be that we give it to a person with the disease and the test works correctly \(P(T|D)*P(D)\) or we give it to a person without the disease and it returns a false positive, \(P(T|!D)*P(!D)\).

So what we’ve done is to take the proportion of the population that has the disease times the probability that the test returns a positive result given they have the disease, plus the proportion of the population that doesn’t have the disease times the probability that the test returns a false positive result given they don’t have the disease.

Combining this new value for the denominator back into our original Bayes’ rule equation yields:

\[P(D|T) = \frac{P(T|D)*P(D)}{P(T)} = \frac{P(T|D)*P(D)}{P(T|D)*P(D) + P(T|!D)*P(!D)}\]

Also note that the first term in the denominator is the term in the numerator.

5.5.7 False Positive and False Negative Rates

Based on our above definitions:

- the false positive rate is \(P(T|!D)\) (the test says I have the disease but I don’t), and

- the false negative rate is \(P(!T|D)\) (the test says I don’t have the disease, but I do).

Now we can use our sum of conditional probabilities rule, namely \(P(T|D) + P(!T|D) = 1\) or \(P(T|D) = 1 - P(!T|D)\)

And so, to find \(P(T)\) all I need to know are my false positive and false negative rates, as well as the overall presence of the disease in the population.

\(P(T) = P(T|D)*P(D) + P(T|!D)*P(!D) = (1-P(!T|D))*P(D) + P(T|!D)*P(!D)\)

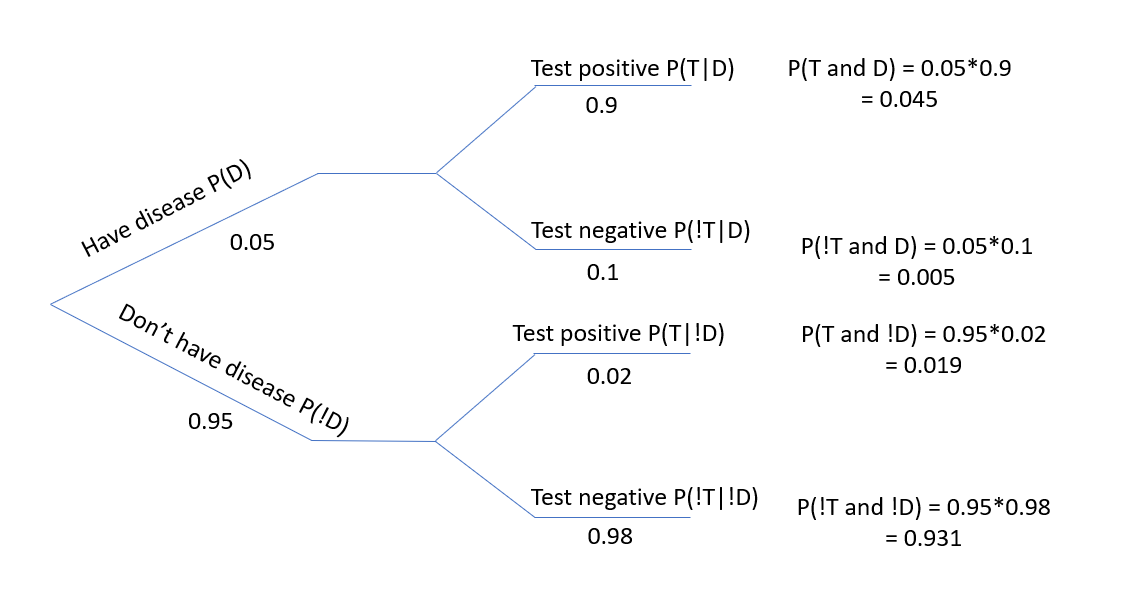

For example, now let’s assume \(P(D) = 0.05\) and our experiments tell us that \(P(T|D) = 0.9\) and \(P(T|!D) = 0.02\).

Now, what is the probability one has the disease if the test is positive? Namely, calculate \(P(D|T)\).

First, let’s calculate \(P(T)= 0.9*0.05 + 0.02*0.95 = 0.064\). This is the overall probability of a test coming back positive in the general population. And be careful about whether you’re given \(P(T|D)\) or \(P(!T|D)\).

Then, putting this all together gives:

\[P(D|T) = \frac{P(T|D)*P(D)}{P(T|D)*P(D) + P(T|!D)*P(!D)} = \frac{0.9*0.05}{0.9*0.05 + 0.02*0.95} = \frac{0.045}{0.064} = 0.703\]

which is the probability of having the disease given the test was positive. Again, we started with \(P(T|D)\) and now we have \(P(D|T)\).

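Q: Why aren’t P(T|D) and P(T|!D) complementary? A: Because they condition on different events (\(D\) versus \(!D\)), so this is NOT the sum of conditionals. That rule pairs complementary outcomes under the same condition, such as \(P(T|D) + P(!T|D) = 1\).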
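Here is a minimal Python sketch of the longer form of Bayes’ rule, using the same numbers as the example above. The function name `posterior` and its argument names are my own labels; it returns both \(P(D|T)\) and the intermediate \(P(T)\).

```python
def posterior(p_t_given_d, p_t_given_not_d, p_d):
    """Return (P(D|T), P(T)) using the expanded denominator.

    P(T) = P(T|D)*P(D) + P(T|!D)*P(!D)
    P(D|T) = P(T|D)*P(D) / P(T)
    """
    p_t = p_t_given_d * p_d + p_t_given_not_d * (1 - p_d)
    return p_t_given_d * p_d / p_t, p_t

p_d_given_t, p_t = posterior(p_t_given_d=0.9, p_t_given_not_d=0.02, p_d=0.05)
print(round(p_t, 3))
print(round(p_d_given_t, 3))

# If you are given the false negative rate P(!T|D) instead, convert it first
# with the sum of conditional probabilities: P(T|D) = 1 - P(!T|D).
false_negative_rate = 0.1
same_answer, _ = posterior(1 - false_negative_rate, 0.02, 0.05)
print(round(same_answer, 3))
```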

5.5.8 2x2 Contingency Table

How would we fill out a 2x2 contingency table with the above data?

| disease | D | !D | total |
|---|---|---|---|
| T | | | |
| !T | | | |
| total | | | |

Maybe it’s not as hard as we might imagine. We’ve already calculated \(P(T) = 0.064\), so we know \(P(!T)\). We were told \(P(D) = 0.05\) hence we also know \(P(!D)\) and hence know all of the marginal probabilities. We also see above that \(P(T\ and\ D) = 0.045\) and can then find all of the remaining joint probabilities through subtraction.
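The subtraction steps described above can be sketched in Python as follows. The variable names are my own; the three starting values are the ones already calculated or given in this section.

```python
p_d = 0.05          # P(D), given
p_t = 0.064         # P(T), calculated earlier
p_t_and_d = 0.045   # P(T and D) = P(T|D) * P(D)

# Each remaining joint probability is a marginal minus a known joint.
p_t_and_not_d = p_t - p_t_and_d
p_not_t_and_d = p_d - p_t_and_d
p_not_t_and_not_d = (1 - p_d) - p_t_and_not_d

rows = [
    ("T", p_t_and_d, p_t_and_not_d, p_t),
    ("!T", p_not_t_and_d, p_not_t_and_not_d, 1 - p_t),
    ("total", p_d, 1 - p_d, 1.0),
]
print(f"{'':6s}{'D':>8s}{'!D':>8s}{'total':>8s}")
for label, d, not_d, tot in rows:
    print(f"{label:6s}{d:8.3f}{not_d:8.3f}{tot:8.3f}")
```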

5.5.9 Guided Practice

Let’s split the class into thirds, work in teams and take one of these three problems. Once complete, you will get the opportunity to present your work to the class.

Your first step should be to make sure you’re explicit about your events. Then figure out what you want to know, and write out the general equation. What do you want to know? What do you know?

- You are planning a picnic today, but the morning starts out cloudy. In general this month, about 40% of the days have started out cloudy. And only 10% of the days this month have been rainy. We also have historical data from the last month and know that looking back at only those days where it rained at some point, 50% of those started out cloudy.

- What is the chance of rain today? Should we cancel?

- Suppose there are three manufacturers (\(X_1, X_2, X_3\)) of a certain product, which all produce different amounts of the product. In the overall market, 80% of the product is manufactured by company \(X_1\), 15% by company \(X_2\) and 5% by company \(X_3\). The companies also all have slightly different defect rates: \(P(D|X_1) = 0.04\), \(P(D|X_2) = 0.06\), and \(P(D|X_3) = 0.09\).

- What is the probability that any given unit (random) has a defect?

- Given a defect, what is the probability it came from manufacturer \(X_1\)?

- At your favorite sushi restaurant there are three itamae (chefs) who switch off; we’ll call them Chefs A, B and C. Chef A prepares food 45% of the time, Chef B 30% of the time and Chef C 25% of the time. If Chef A is cooking, there’s a 90% chance the food is good. If Chef B is cooking, there’s a 75% chance the food is good. And if Chef C is cooking there’s a 50% chance the food is good. You went to the restaurant last Saturday night and the food was NOT good. What is the probability that Chef B was cooking?
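The second and third problems split the population into three groups rather than two, but the approach is the same: multiply each conditional by its marginal to get the joints, add the joints to get the overall probability, then divide. Without giving away any answers, here is a hedged Python sketch of that pattern. The function name `posterior` and its arguments are hypothetical, and the check at the end reuses the disease-testing numbers from earlier in this section rather than any of the problems above.

```python
def posterior(priors, likelihoods):
    """Return P(H_i | E) for each group H_i.

    priors:      list of P(H_i); the groups must not overlap and must sum to 1
    likelihoods: list of P(E | H_i), in the same order as priors
    """
    if len(priors) != len(likelihoods):
        raise ValueError("priors and likelihoods must have the same length")
    if abs(sum(priors) - 1.0) > 1e-9:
        raise ValueError("priors must sum to 1")
    # General multiplication rule: P(E and H_i) = P(E|H_i) * P(H_i)
    joints = [p * l for p, l in zip(priors, likelihoods)]
    # Marginal probability of E: sum of the joints over all groups
    p_e = sum(joints)
    # Bayes' rule for each group
    return [j / p_e for j in joints]


# Check against the disease example: groups are D and !D, evidence is T
post = posterior([0.05, 0.95], [0.9, 0.02])
print([round(p, 3) for p in post])
```

The first entry should match the \(P(D|T)\) we calculated by hand, and the entries always sum to 1 because they are all conditioned on the same event.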

5.5.10 Optional: Tree Diagrams

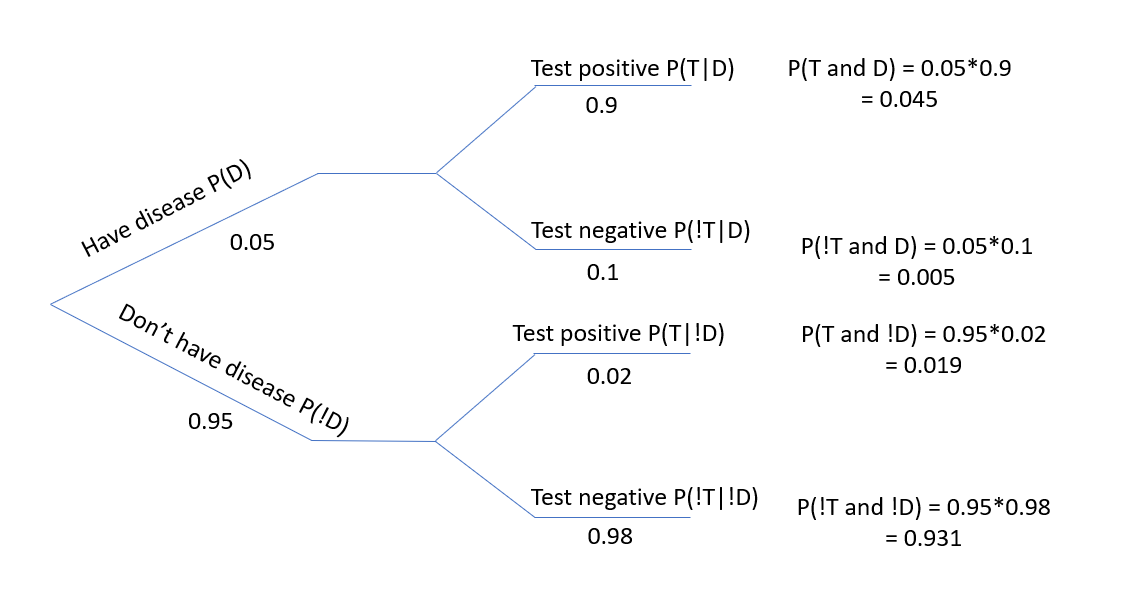

“Tree diagrams are a tool to organize outcomes and probabilities around the structure of the data” (p102). As you will see, tree diagrams are populated with conditional and marginal probabilities. And then, we can use this to compute the joint probabilities.

Let’s go back to our original disease and testing data from above.

Note we have “have the disease” on the upper branch and “don’t have the disease” on the lower branch.

And the critical part is to remember that \(P(T|D)\) and \(P(!T|D)\) are complementary (i.e. they sum to 1) because of the sum of conditional probabilities.

Figure 5.2: Tree diagram showing conditional probabilities.

Some people find that visualizing it in this manner makes it easier to understand and to work through what’s happening.
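For those who prefer code to pictures, the same tree can be written as a small nested structure and the joint probabilities found by multiplying along each path. This is a minimal sketch assuming the disease and testing numbers from above; the dictionary layout and names are illustrative only.

```python
# Disease example: first split on disease status, then on test result
p_d = 0.05
p_t_given_d = 0.9
p_t_given_not_d = 0.02

# Each first-level branch holds (marginal probability, conditional branches).
# The conditional branches under one node are complementary and sum to 1.
tree = {
    "D": (p_d, {"T": p_t_given_d, "!T": 1 - p_t_given_d}),
    "!D": (1 - p_d, {"T": p_t_given_not_d, "!T": 1 - p_t_given_not_d}),
}

leaves = {}
for disease, (p_marginal, branches) in tree.items():
    for test, p_conditional in branches.items():
        # Multiply along the path: P(test and disease) = P(test|disease) * P(disease)
        joint = p_conditional * p_marginal
        leaves[(test, disease)] = joint
        print(f"P({test} and {disease}) = {p_conditional:.2f} * {p_marginal:.2f} = {joint:.3f}")

# The leaves cover every possible outcome, so they should sum to 1
print(f"sum of leaves = {sum(leaves.values()):.3f}")
```

Adding the two leaves that end in `T` recovers \(P(T)\), which is exactly the denominator in Bayes’ rule.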

5.6 Review

| Name | Equation |
|---|---|
| Marginal and Joint Probabilities | \(P(A) = P(A\ and\ B) + P(A\ and\ !B)\) |
| Conditional Probability | \(P(A|B) = \frac{P(A\ and\ B)}{P(B)}\) |
| General Multiplication Rule | \(P(A\ and\ B) = P(A|B)*P(B)\) |
| Sum of Conditional Probabilities | \(P(A_1|B) + P(A_2|B) + ... + P(A_n|B) = 1\) |
| Bayes’ Rule | \(P(B|A) = \frac{P(A|B)*P(B)}{P(A)} = \frac{P(A|B)*P(B)}{P(A|B)*P(B) + P(A|!B)*P(!B)}\) |

Make sure you also understand how these simplify under the assumption of independence.
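As a brief reminder of what that simplification looks like: if A and B are independent, knowing that B happened tells us nothing about A, so the conditional probability equals the marginal. Substituting that into the general multiplication rule and Bayes’ rule gives

\[P(A|B) = P(A), \qquad P(A\ and\ B) = P(A)*P(B), \qquad P(B|A) = \frac{P(A)*P(B)}{P(A)} = P(B)\]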

5.6.1 Review of Learning Objectives

By the end of this chapter you should be able to

- Create and use a contingency table based on probabilities or counts. Calculate and fill in missing values.

- Explain how joint and marginal probabilities appear in contingency tables

- Define conditional probability and give examples where it might be useful

- Use algebra to derive the relationship between joint, marginal and conditional probabilities

- Explain and use the sum of conditional probabilities rule and the general multiplication rule

- Demonstrate how to evaluate if data exhibit independence

- Define Bayes’ rule and calculate conditional probabilities using Bayes’ rule

- (Draw and use tree diagrams to represent conditional probabilities)

5.7 Exercises

Exercise 5.1 For two events, A and B, let \(P(A) = 0.3\) and \(P(B) = 0.7\).

- Can you compute \(P(A\ and\ B)\) if you only know \(P(A)\) and \(P(B)\)?

- If events A and B arise independently, what is \(P(A\ and\ B)\)?

- If events A and B arise independently, what is \(P(A\ or\ B)\)?

- If events A and B arise independently, what is \(P(A|B)\)?

Exercise 5.3 Suppose 80% of people like peanut butter, 89% like jelly, and 78% like both.

- Does it seem that liking peanut butter and liking jelly are independent of one another?

- Define your events using probability notation.

- Given that a randomly sampled person likes peanut butter, what’s the probability that he/she also likes jelly? Write this in probability notation and calculate the answer.

- Given that a randomly sampled person doesn’t like peanut butter, what’s the probability that he/she also doesn’t like jelly? Write this in probability notation and calculate the answer.

Exercise 5.4 The following table shows counts of gun ownership by state in the PNW for 399 randomly sampled people. Note the proportions of people per state are roughly proportional to the state populations.

| num of people | WA | OR | ID | MT | |
|---|---|---|---|---|---|
| don’t own a gun | 149 | 84 | 21 | 14 | |
| own one or more guns | 58 | 31 | 27 | 15 | |

- Convert this table to proportions.

- Does your table suggest independence between gun ownership and state? Pick at least two cells to evaluate.

- If a randomly sampled person owns a gun, what is the probability they are from ID or MT?

- If a randomly sampled person is from OR or WA, what is the probability that they don’t own a gun?

Exercise 5.5 After an introductory statistics course, 80% of students can successfully construct box plots. Of those who can construct box plots, 86% passed, while only 65% of those students who could not construct box plots passed.

(Hint: you may find it useful to create a 2x2 table to answer this problem.)

- Define your two events, and write each of the probabilities listed above using probability notation.

- Given that a student passed, what is the probability that she is able to construct a box plot? Write this in probability notation and calculate the answer.

- Given that a student cannot construct a box plot, what is the probability that he passed? Write this in probability notation and calculate the answer.

Exercise 5.8 Bayes’ rule can be used to assist with spam email filtering. Compute the probability that an email message is spam, given that a certain word appears in the message. Let W be the event that a particular email has a given word. Let S be the event that a particular email is spam.

Write the equation for \(P(S|W)\) assuming you knew the probability of a spam email containing the chosen word, as well as the probability of spam and the probability of all emails containing that word.

Now, assume the word is “free”. Assume the overall probability an email is spam is 0.2 and the probability that an email contains the word “free” is 0.12. Also assume that 40% of spam emails contain the word “free”. What is the probability an email is spam given it contains the word “free”?

Exercise 5.13 Joe is a randomly chosen member of a large population in which 3% are heroin users. Joe tests positive for heroin in a drug test that correctly identifies users 95% of the time (aka “sensitivity”) and correctly identifies nonusers 90% of the time (aka “specificity”). Determine the probability that Joe uses heroin (= H) given the positive test result (= E).

- What are the false positive and false negative test rates? Write these both as probability statements and calculate the values.

- What is the probability of a positive test result? Write this as a probability statement and calculate the value.

- What is the probability that Joe uses heroin given the positive test result? What do these results suggest to you?

- Bonus: Would taking the test again change the results? Why or why not?

Exercise 5.14 Suppose that 78% of the students at a particular college have a Facebook account and 43% have a Twitter account.

- Using only this information, what is the largest possible value for the percentage who have both a Facebook account and a Twitter account? Describe the (unrealistic) situation in which this occurs.

- Using only this information, what is the smallest possible value for the percentage who have both a Facebook account and a Twitter account? Describe the (unrealistic) situation in which this occurs.

Now assume that 36% of the students have both a Facebook account and a Twitter account.

- What percentage of students have at least one of these accounts?

- What percentage of students have neither of these accounts?

- What percentage of students have one of these accounts but not both?